Take a modern Linux VM (kernel 5.4.92) configured with a virtual NVMe storage adapter. Especially under load, the VM logs QID timeout messages, which coincide with a temporary pause of the guest kernel. At those times, look at the logs on the host OS for indicators of storage problems. I think it's important to understand that the host storage backing the guest's virtual NVMe device is probably irrelevant. I can confirm that the Workstation Pro 16 virtual NVMe interface leaves something to be desired.

I saw someone state that the SCSI controller performs better, but I was wondering whether anyone is using the NVMe controller type with a Windows guest on a Linux host, with a dedicated entire disk, and whether it works.

I tried tuning various kernel and module parameters: turning off PCIe/NVMe core driver power saving, using different I/O schedulers, etc. On intensive disk usage, I get "nvme: QID Timeout, aborting".
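
The post doesn't say exactly which parameters were tried. As an assumption, two real kernel parameters commonly used to switch off NVMe and PCIe power saving are `nvme_core.default_ps_max_latency_us=0` (disables NVMe Autonomous Power State Transitions) and `pcie_aspm=off` (disables PCIe Active State Power Management). The following is a minimal Python sketch, with hypothetical helper names `parse_cmdline` and `missing_overrides`, that reports which of those two overrides are absent from a kernel command line such as the contents of `/proc/cmdline` in the guest.

```python
from __future__ import annotations

# Assumed power-saving overrides (not confirmed by the post), mapped to the
# value that turns the feature off. Names are matched exactly as written.
POWER_SAVING_OVERRIDES = {
    # 0 disables NVMe Autonomous Power State Transitions (APST).
    "nvme_core.default_ps_max_latency_us": "0",
    # "off" disables PCIe Active State Power Management (ASPM).
    "pcie_aspm": "off",
}


def parse_cmdline(cmdline: str) -> dict:
    """Split a /proc/cmdline-style string into a {name: value} dict.

    Bare flags such as "ro" map to an empty string. Quoted values containing
    spaces are not handled by this simple whitespace split.
    """
    params: dict = {}
    for token in cmdline.split():
        name, _, value = token.partition("=")
        params[name] = value
    return params


def missing_overrides(cmdline: str) -> list:
    """Return the overrides that are absent or set to a different value."""
    params = parse_cmdline(cmdline)
    return [
        f"{name}={wanted}"
        for name, wanted in POWER_SAVING_OVERRIDES.items()
        if params.get(name) != wanted
    ]


if __name__ == "__main__":
    # Made-up example command line; on a real guest, read /proc/cmdline instead.
    example = "BOOT_IMAGE=/vmlinuz-5.4.92 root=/dev/sda1 ro quiet pcie_aspm=off"
    print(missing_overrides(example))
```

Note that a parameter being present on the command line only shows it was requested; whether it helps with the QID timeouts is exactly what the post is testing.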

VUbuntu2004 => Running Ubuntu 20.04 LTS, with an entire physical Samsung NVMe SSD 870 Evo Plus attached through the NVMe controller type. => On intensive disk usage, I get "nvme: QID Timeout, completion polled".

VUbuntu1804 => Running Ubuntu 18.04 LTS, with an entire physical Western Digital NVMe SSD WDS500 attached through the NVMe controller type.
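
To see how often each kind of timeout occurs under load, a minimal sketch can extract these messages from the guest's kernel log (for example, `dmesg` output saved to a file). It assumes the full message format printed by the Linux NVMe PCI driver in 5.x kernels, `nvme nvme0: I/O <tag> QID <qid> timeout, <action>`, of which the quotes above are shortened forms. The names `TIMEOUT_RE`, `QidTimeout`, `parse_timeouts` and `summarize` are hypothetical, and the sample log lines are made up. Based on a reading of the driver source (an interpretation, not something stated in the post), "completion polled" means the command turned out to be already complete when the driver polled the queue, i.e. the interrupt was not handled in time, while "aborting" means the driver sent an NVMe Abort for the command.

```python
import re
from collections import Counter
from typing import Iterable, Iterator, NamedTuple

# Matches messages such as
#   "nvme nvme0: I/O 123 QID 4 timeout, aborting"
#   "nvme nvme0: I/O 7 QID 2 timeout, completion polled"
# anywhere in the line, so a leading dmesg timestamp is ignored.
TIMEOUT_RE = re.compile(
    r"nvme (?P<ctrl>nvme\d+): I/O (?P<tag>\d+) QID (?P<qid>\d+) "
    r"timeout, (?P<action>[a-z ]+)"
)


class QidTimeout(NamedTuple):
    ctrl: str    # controller name, e.g. "nvme0"
    tag: int     # command tag of the timed-out request
    qid: int     # queue ID (0 is the admin queue, >0 are I/O queues)
    action: str  # what the driver did next, e.g. "aborting"


def parse_timeouts(lines: Iterable[str]) -> Iterator[QidTimeout]:
    """Yield a QidTimeout for every kernel log line that matches."""
    for line in lines:
        m = TIMEOUT_RE.search(line)
        if m:
            yield QidTimeout(
                m["ctrl"], int(m["tag"]), int(m["qid"]), m["action"].strip()
            )


def summarize(lines: Iterable[str]) -> Counter:
    """Count timeouts per (controller, action) pair."""
    return Counter((t.ctrl, t.action) for t in parse_timeouts(lines))


if __name__ == "__main__":
    # Made-up sample lines in the kernel's format, plus one unrelated line.
    sample = [
        "[  812.114311] nvme nvme0: I/O 123 QID 4 timeout, aborting",
        "[  812.114402] nvme nvme0: I/O 7 QID 2 timeout, completion polled",
        "[  815.000001] EXT4-fs (nvme0n1p2): re-mounted.",
    ]
    for (ctrl, action), n in summarize(sample).items():
        print(f"{ctrl}: {n} x '{action}'")
```

A regex over the text log keeps the sketch dependency-free; a high count of "completion polled" relative to "aborting" would point more toward interrupt delivery in the virtual controller than toward the backing disk.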

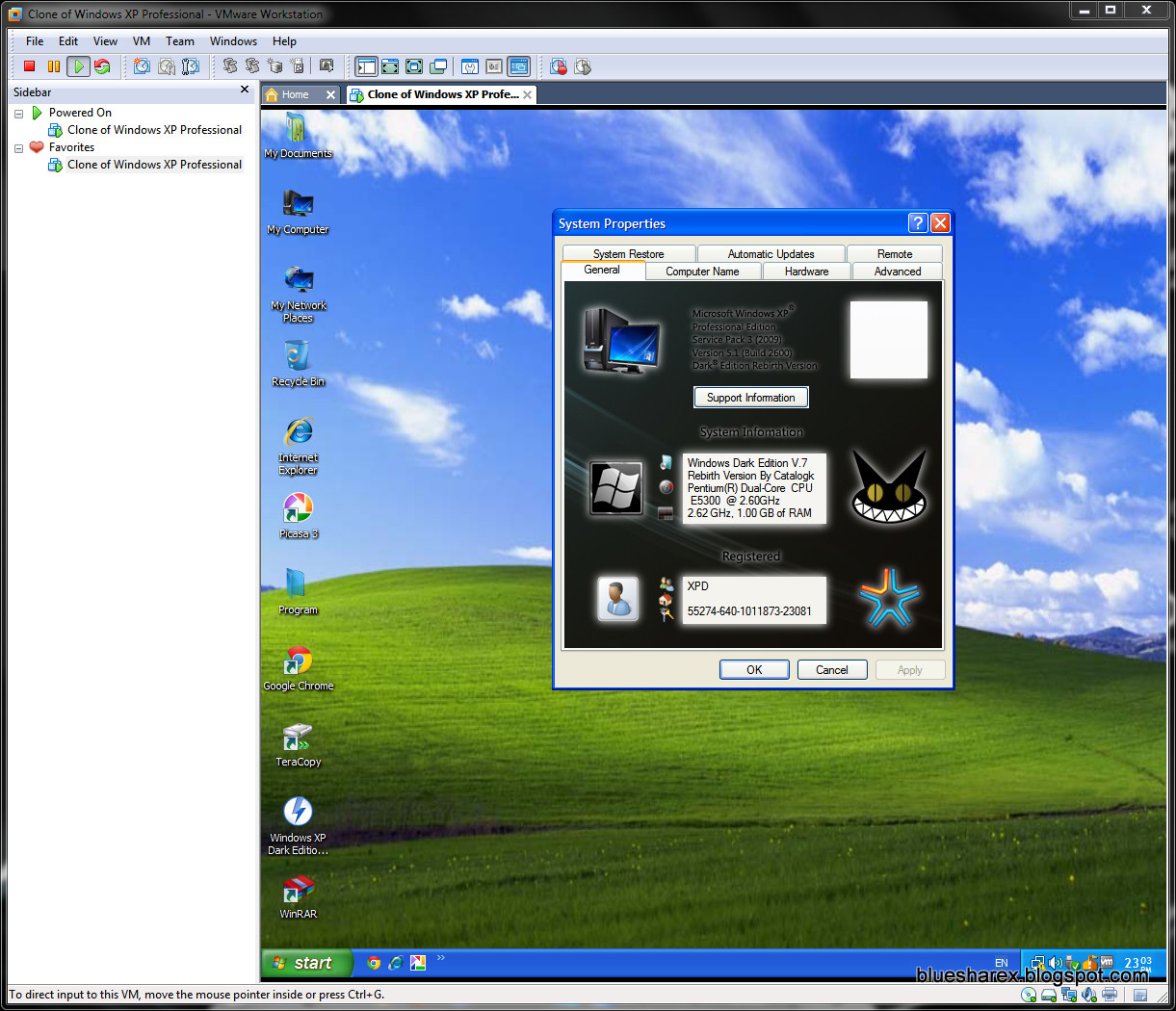

My host machine runs Windows 10 Pro on an AMD Ryzen 3800XT with an NVMe SSD. I'm encountering NVMe errors (nvme QID timeouts) in my Linux guest OS when I allocate an entire NVMe disk to a virtual machine. I've run some tests (see above).